“The Evolution of NVIDIA Chips: A Journey from Graphics to AI and Beyond” by nehalmr

NVIDIA, a pioneer in the field of graphics processing units (GPUs), has come a long way since its inception in 1993. From humble beginnings with the NV1, the company has consistently pushed the boundaries of innovation, transforming the landscape of computing and AI. This article delves into the history and details of NVIDIA chips, exploring their evolution, key milestones, and applications.

Early Years (1993–1999)

NVIDIA’s first product, the NV1, was released in 1995. Although it did not gain significant traction, it laid the foundation for future developments. The NV4 (RIVA TNT), released in 1998, marked a turning point thanks to its improved driver support, which became a crucial factor in the company’s success. The NV5 (RIVA TNT2), launched in 1999, introduced significant updates, including 32-bit Z-buffer/stencil support and up to 32MB of VRAM[7].

GeForce Era (1999–2001)

The GeForce 256, released in late 1999, was a groundbreaking product that cemented NVIDIA’s position in the market. Marketed by NVIDIA as the “world’s first GPU,” it moved transform and lighting (T&L) calculations into hardware and brought improved pixel pipelines, delivering a significant performance boost over its predecessors. The GeForce 256 supported up to 64MB of DDR SDRAM, ran at 166MHz, and was roughly 50% faster than the NV5[7]. This marked the beginning of the GeForce brand, which would become synonymous with high-performance graphics.

GeForce2 and GeForce3 (2000–2002)

The GeForce2 series, launched in 2000, brought higher clock rates and, with the GeForce2 MX, support for multi-monitor setups. The GeForce3, released in 2001, was the first DirectX 8-compatible GPU, featuring programmable pixel and vertex shaders and multisample anti-aliasing[7].

GeForce FX Series (2002–2004)

The GeForce FX series, launched in 2002, marked NVIDIA’s move to DirectX 9-class hardware. Designed for high-performance gaming, these GPUs offered more flexible programmable pixel and vertex shaders (Shader Model 2.0) and advanced anti-aliasing techniques[7].

CUDA and GPU Computing (2007–2010)

NVIDIA’s introduction of CUDA in 2007 revolutionized GPU computing. CUDA enabled developers to harness the parallel processing capabilities of GPUs for tasks beyond graphics rendering, such as scientific simulations, data analytics, and machine learning. This marked a significant shift in the company’s focus, from solely graphics processing to a broader range of applications[6].
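To make the CUDA programming model a little more concrete, here is a minimal sketch of vector addition in CUDA C++. A kernel runs as many lightweight threads, each handling one array element, while a host-side wrapper moves data between CPU and GPU memory. The function names `vector_add_kernel` and `vector_add` and the block size of 256 threads are illustrative assumptions, not anything taken from the cited sources, and error handling is kept deliberately simple.

```cuda
#include <cstddef>
#include <cuda_runtime.h>

// Hypothetical example kernel: each GPU thread computes one element of c = a + b.
__global__ void vector_add_kernel(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global index of this thread
    if (i < n) {                                     // the grid may contain more threads than elements
        c[i] = a[i] + b[i];
    }
}

// Hypothetical host wrapper: copies inputs to the GPU, launches the kernel,
// and copies the result back. Returns the first CUDA error encountered, or cudaSuccess.
cudaError_t vector_add(const float* a, const float* b, float* c, int n) {
    if (n <= 0) return cudaSuccess;  // nothing to do; avoids a zero-block launch

    const std::size_t bytes = static_cast<std::size_t>(n) * sizeof(float);
    float *d_a = nullptr, *d_b = nullptr, *d_c = nullptr;

    // Allocate device buffers and upload the inputs, stopping at the first failure.
    cudaError_t err = cudaMalloc(&d_a, bytes);
    if (err == cudaSuccess) err = cudaMalloc(&d_b, bytes);
    if (err == cudaSuccess) err = cudaMalloc(&d_c, bytes);
    if (err == cudaSuccess) err = cudaMemcpy(d_a, a, bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemcpy(d_b, b, bytes, cudaMemcpyHostToDevice);

    if (err == cudaSuccess) {
        const int threadsPerBlock = 256;                                  // illustrative choice
        const int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;   // ceiling division
        vector_add_kernel<<<blocks, threadsPerBlock>>>(d_a, d_b, d_c, n);
        err = cudaGetLastError();                                         // catches launch errors
    }

    // cudaMemcpy waits for the preceding kernel on the default stream to finish.
    if (err == cudaSuccess) err = cudaMemcpy(c, d_c, bytes, cudaMemcpyDeviceToHost);

    // cudaFree on a null pointer is a no-op, so unconditional cleanup is safe.
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);
    return err;
}
```

A caller allocates ordinary host arrays and calls `vector_add(a, b, c, n)`. Mapping one thread per element is the simplest decomposition: the ceiling division launches enough blocks to cover all `n` elements, and the `i < n` guard discards the surplus threads in the last block.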

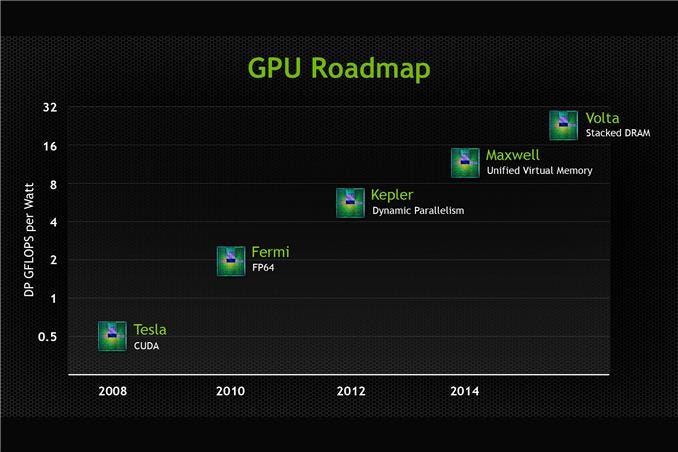

Kepler and Maxwell (2012–2016)

The Kepler and Maxwell architectures, released in 2012 and 2014, respectively, further solidified NVIDIA’s position in the market. These GPUs brought significant improvements in power efficiency, performance, and memory bandwidth, making them increasingly attractive for datacenter and cloud computing workloads.

Pascal and Volta (2016–2018)

The Pascal and Volta architectures, launched in 2016 and 2017, respectively, brought significant advancements in AI and deep learning capabilities. Pascal’s Tesla P100 added HBM2 memory, the NVLink interconnect, and fast half-precision (FP16) arithmetic for deep learning, while Volta introduced Tensor Cores, which accelerated deep learning tasks by up to 12 times[6].

Turing and Ampere (2018–2022)

![image](https://developer.nvidia.com/blog/wp-content/uploads/2018/09/image12.jpg)

The Turing and Ampere architectures, released in 2018 and 2020, respectively, continued NVIDIA’s momentum in AI and deep learning. Turing introduced RT Cores for real-time ray tracing, along with second-generation Tensor Cores for AI-enhanced graphics such as DLSS, while Ampere introduced third-generation Tensor Cores and improved power efficiency.

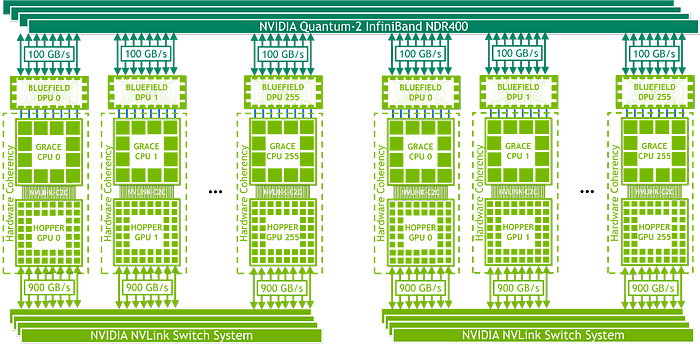

NVIDIA Grace and Hopper (2022–Present)

The NVIDIA Grace and Hopper architectures, detailed in 2022, mark NVIDIA’s expansion beyond the GPU into datacenter CPUs. The NVIDIA Grace CPU Superchip, an Arm-based datacenter processor, combines two Grace CPUs, linked by the NVLink-C2C chip-to-chip interconnect, with server-class LPDDR5X memory. The NVIDIA Hopper GPU, announced alongside Grace, improves performance and power efficiency and adds fourth-generation Tensor Cores and a Transformer Engine for accelerating transformer models[3].

Applications and Impact

NVIDIA chips have had a profound impact across various industries, including:

1. Gaming: NVIDIA’s GPUs have consistently pushed the boundaries of gaming performance, enabling immersive and realistic graphics experiences.

2. Artificial Intelligence: NVIDIA’s AI technologies, including CUDA, cuDNN, and TensorRT, have enabled the development of AI applications in fields such as healthcare, finance, and autonomous vehicles.

3. Data Centers: NVIDIA’s datacenter GPUs and CPUs power AI and deep learning training and inference, as well as high-performance computing and cloud computing applications.

4. Autonomous Vehicles: NVIDIA’s DRIVE platform, including the DRIVE Atlan system-on-a-chip announced in 2021 (later superseded by DRIVE Thor), is designed for AI-defined vehicles that process large volumes of sensor data and make decisions in real time[1][5].

Conclusion

NVIDIA’s journey from humble beginnings to its current position as a leader in AI and deep learning is a testament to the company’s commitment to innovation and excellence. From the early days of graphics processing to the current era of AI and datacenter computing, NVIDIA chips have consistently pushed the boundaries of what is possible. As the company continues to evolve and innovate, it is clear that its impact will be felt for generations to come.

Citations:

[1] https://www.nvidia.com/en-in/self-driving-cars/data-center/

[2] https://www.nvidia.com/en-in/

[3] https://developer.nvidia.com/blog/nvidia-grace-cpu-superchip-architecture-in-depth/

[4] https://www.nvidia.com/en-in/self-driving-cars/

[5] https://nvidianews.nvidia.com/news/nvidia-unveils-nvidia-drive-atlan-an-ai-data-center-on-wheels-fornext-gen-autonomous-vehicles

[6] https://www.aibrilliance.com/blog/the-crucial-role-of-nvidia-chips-in-shaping-next-gen-ai

[7] https://www.pocket-lint.com/nvidia-gpu-history/

[8] https://www.nvidia.com/en-in/ai-data-science/

[9] https://www.nvidia.com/content/gtc-2010/pdfs/2275_gtc2010.pdf

[10] https://www.nvidia.com/en-in/technologies/

[11] https://www.britannica.com/topic/NVIDIA-Corporation

[12] https://developer.nvidia.com/content/life-triangle-nvidias-logical-pipeline

[13] https://developer.nvidia.com/gpugems/gpugems2/part-iv-general-purpose-computation-gpus-primer/chapter-30-geforce-6-series-gpu

[14] https://www.studio1productions.com/Articles/NVidia-GPU-Chart.htm

[15] https://www.cio.com/article/646471/how-nvidia-became-a-trillion-dollar-company.html

[16] https://www.nvidia.com/content/dam/en-zz/Solutions/industries/public-sector/govt-affairs/public-sector-pdf-government-affairs-rfi-2589388.pdf

[17] https://www.untaylored.com/post/exploring-nvidia-s-business-model-and-revenue-streams

[18] https://images.nvidia.com/aem-dam/Solutions/Data-Center/l4/nvidia-ada-gpu-architecture-whitepaper-v2.1.pdf

[19] https://www.nvidia.com/content/PDF/nvidia-ampere-ga-102-gpu-architecture-whitepaper-v2.pdf